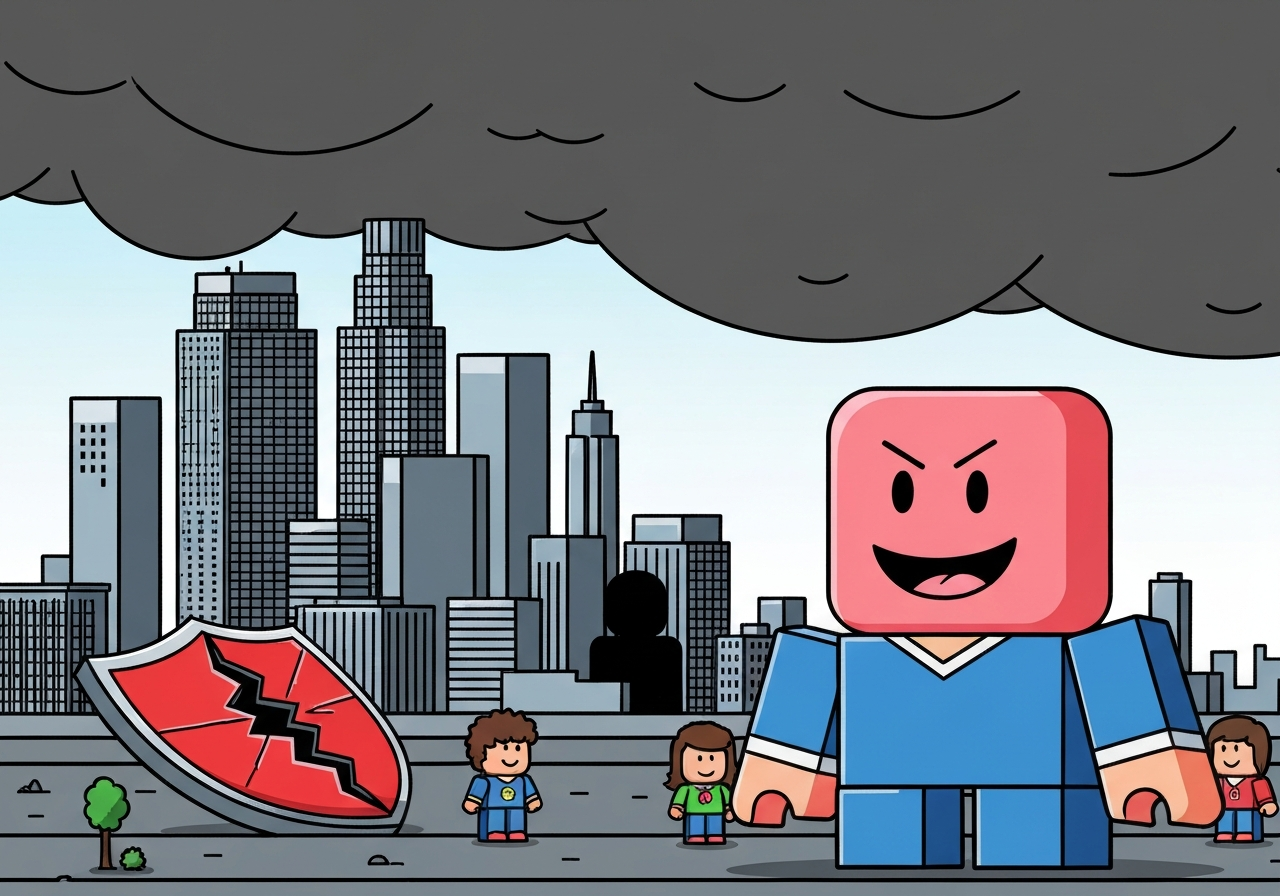

Lead: Los Angeles County filed a lawsuit on Thursday, 20 February 2026, accusing Roblox of exposing children to sexual content, grooming and exploitation by failing to moderate user-generated material and by operating inadequate age-verification systems. The county alleges that the platform markets itself as safe for kids while its design and controls leave young users vulnerable. Roblox disputes the claims and says safety is central to its product. The case adds to growing legal pressure on major online platforms over youth safety and algorithmic risk.

Key Takeaways

- Los Angeles County filed the suit on 20 February 2026, alleging public nuisance and violations of California’s false advertising law.

- Roblox reports roughly 144 million daily active users globally, with over 40% estimated to be under 13 years old.

- The complaint alleges failures in content moderation and age-restriction enforcement, and a failure to fully disclose sexual-content risks on the platform.

- County officials contend platform design facilitates grooming, exploitation and even physical assaults following online contact.

- Roblox rejects the allegations, saying it has built safety protections and disables image sharing in chat to reduce misuse.

- A 2024 Hindenburg Research report criticized Roblox for exposing children to sexual content, and Australia sought urgent talks with the company this month about child safety.

- The lawsuit follows broader litigation in Los Angeles targeting social media firms for harms to young people tied to algorithmic engagement.

Background

Roblox launched as a user-driven gaming and creation platform where players design and play experiences using avatars and chat. Its freemium model lets users access content for free while offering a virtual currency for in-game purchases. The platform’s combination of live chat, user-created worlds and a predominantly child user base has raised safety questions for years.

Regulatory and advocacy attention ramped up after investigative reports and watchdog findings alleged persistent exposure of minors to explicit material and grooming. A 2024 report from Hindenburg Research framed the platform as a locus of sexual risks to children, intensifying scrutiny from governments and lawmakers. Platforms that rely on large amounts of user-generated content face technical and resource hurdles when trying to filter all problematic interactions in real time.

Main Event

On 20 February 2026, the Los Angeles County Counsel’s office filed a complaint accusing Roblox of public nuisance and false advertising, asserting the company markets itself as safe for kids while its systems permit predators to target children. The complaint argues that moderation tools and age checks are inadequate and that the company has understated the scope of sexual content and grooming on its services.

County leaders, including Board of Supervisors chair Hilda Solis, framed the action as a child-protection measure aimed at forcing stronger safety practices. The complaint quotes a county lawyer describing the platform’s design as making children “easy prey” for pedophiles and asks the court to address systemic failures rather than isolated incidents.

Roblox responded through public statements and comments to news agencies, saying it built safety into the product, employs advanced detection systems and blocks image transfers in chat. The company also noted it acts against accounts that breach rules and cooperates with law enforcement, while acknowledging no system is perfect and that safety work is ongoing.

Analysis & Implications

The lawsuit targets structural design choices and promises on safety rather than only individual bad actors. If the county prevails, it could set a precedent allowing local governments to seek remedies for alleged platform design harms beyond traditional content takedown requests, amplifying legal exposure for firms hosting child-focused, user-generated ecosystems.

For Roblox, potential legal and reputational consequences include statutory penalties, mandated product changes, expanded monitoring obligations, and increased compliance costs. Those outcomes could influence the product roadmap—tightening chat, limiting certain user interactions, or altering monetization features to reduce risk vectors.

More broadly, the case underscores a regulatory trend: authorities are moving from asking platforms to remove specific content toward contesting the business models and technical architectures that create risk. Other companies hosting large youth audiences may face similar scrutiny, prompting industry-wide investment in safety technology and policy changes both within apps and in legislation.

Economically, higher compliance and moderation demands could raise operating costs and slow feature development. Conversely, demonstrable safety improvements could strengthen parental trust and long-term platform resilience. Litigation outcomes will also inform how courts interpret claims of false advertising and public nuisance against digital services.

Comparison & Data

| Metric | Roblox (reported) | Context / Source Year |
|---|---|---|
| Daily active users | 144 million | Company report, 2026 |
| Share under 13 | Over 40% | Company estimate, 2026 |
| Notable critical report | Hindenburg Research | 2024 |
| Lawsuit file date | 20 February 2026 | LA County filing |

The table highlights basic figures and milestones central to the complaint and ongoing debate. While Roblox’s user numbers and age composition are drawn from company disclosures, allegations about content exposure derive from investigative reports and the county’s legal filing. Quantifying the frequency and severity of grooming or exploitation on a platform of this scale remains difficult without comprehensive, independently audited data.

Reactions & Quotes

This lawsuit is about protecting children from online predators and inappropriate content.

Hilda Solis, Los Angeles County Board of Supervisors chair (public statement)

Context: Solis framed the legal action as a public-safety intervention, emphasizing the county’s responsibility to act on alleged systemic risks to minors.

The design of the platform makes children easy prey for pedophiles, and the trauma that results is horrific.

Dawyn R. Harrison, Los Angeles County Counsel (complaint summary)

Context: The county counsel’s office characterizes the suit as addressing structural failures rather than isolated moderation lapses and highlights potential harms including grooming and exploitation.

We built our platform with safety at its core and employ advanced safeguards; users cannot send or receive images via chat.

Roblox spokesperson (company statement)

Context: Roblox emphasized technical measures—such as disabling image sharing in chat—and cooperation with law enforcement to rebut the county’s claims.

Unconfirmed

- The county describes the full scope and frequency of grooming incidents in the complaint, but detailed, independently released incident-level data are not in the public record.

- Claims about specific cases of assault allegedly resulting from Roblox interactions are summarized in the filing; not all referenced incidents have been publicly corroborated in court filings available to the press.

- Both parties make competing claims about the effectiveness and coverage of Roblox’s internal moderation systems; independent audits quantifying missed harms are not publicly available.

Bottom Line

Los Angeles County’s lawsuit marks a significant escalation in how local governments hold online platforms accountable for child safety. By framing the issue as one of platform design and advertising, the county is seeking remedies that could force deeper operational changes than standard content takedowns or account bans.

For parents, policymakers and platform operators, the case highlights persistent tensions: how to preserve creative, social experiences for young users while preventing exploitation. The legal outcome will likely influence product policy, regulatory approaches and expectations around transparency and independent verification of safety claims.

Sources

- The Guardian — Media report on the lawsuit and reactions (news)

- Hindenburg Research — Investigative report alleging child-safety failures (research report, 2024)

- Roblox Corporation — Company statements on safety measures and policies (official)